We talk to a lot of analysts at Lab41. A recurring theme of these conversations is what they frequently refer to as “result provenance.” Translation — “Are these results any good? Can I trust them? I don’t have a whole lot of time to research them any further, so will these results hold up under scrutiny?”

These questions aren’t new. The old adage about lies, damned lies, and statistics has been around for just about forever. They become a lot harder to answer, though, with the advent of semi-automated analytic techniques such as machine learning (ML) and deep learning (DL). Few data scientists, let alone your average analyst, can answer them in a world dominated by such concepts as cold-start, back-propagation, hyper-parameters, sigmoid activation functions, batch normalization, yada, yada, yada.

The immediate gratification of rapid results is tempered, and complicated, by the reality that it is hard to know what data was used to train the network. Familiarity with the given data may not be sufficient either, since one of deep learning’s greatest attractions is how it discerns discriminating features humans were not aware of. Validating that an autonomous vehicle is adhering to the rules of the road is one thing. Knowing that the model chose the right peptide sequence from a set with many thousands of subtle protein differences may be an entirely different matter. These issues can only worsen as ML/DL techniques become more pervasive. This is not to disparage ML and DL; quite the contrary. The concern here is how best to deal with the side effects of increased complexity and opacity that accompany these powerful technologies.

One way experts try to deal with uncertainty is to publish various types of quality or trust scores alongside the results. This type of self-attestation has limited value, however. As has been documented repeatedly in the cybersecurity field, entities vouching for themselves cannot always be trusted to tell the truth; this is how a lot of malware propagates across a network. Ideally, such self-attestation should be corroborated by one or more third parties.
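One simple way to picture third-party corroboration is to compare the confidence a model reports about itself with how often it is actually right on examples labeled by someone independent. The sketch below is only an illustration of that idea, not a Lab41 tool: the function name `calibration_gap`, the bin count, and the sample numbers are all hypothetical, and it assumes confidences are probabilities between 0 and 1.

```python
from typing import Sequence


def calibration_gap(
    confidences: Sequence[float],
    predictions: Sequence[str],
    independent_labels: Sequence[str],
    n_bins: int = 5,
) -> float:
    """Weighted average gap between self-reported confidence and the
    accuracy measured against independently produced labels.

    0.0 means the model's trust scores match reality on this sample;
    larger values mean the scores over- or under-state how reliable it is.
    """
    n = len(independent_labels)
    if n == 0 or not (len(confidences) == len(predictions) == n):
        raise ValueError("inputs must be non-empty and of equal length")

    # Group results by how confident the model claimed to be.
    bins = [[] for _ in range(n_bins)]
    for conf, pred, label in zip(confidences, predictions, independent_labels):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[idx].append((conf, pred == label))

    gap = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Weight each bin by the share of results that fall into it.
        gap += (len(members) / n) * abs(mean_conf - accuracy)
    return gap


if __name__ == "__main__":
    # Hypothetical data: the model claims 90% confidence on every result,
    # but an independent reviewer agrees with only half of them.
    claimed = [0.9] * 10
    predicted = ["a"] * 10
    reviewed = ["a"] * 5 + ["b"] * 5
    print(f"calibration gap: {calibration_gap(claimed, predicted, reviewed):.2f}")
```

A large gap does not say why the scores are off, only that the model's own attestation should not be taken at face value for this data.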

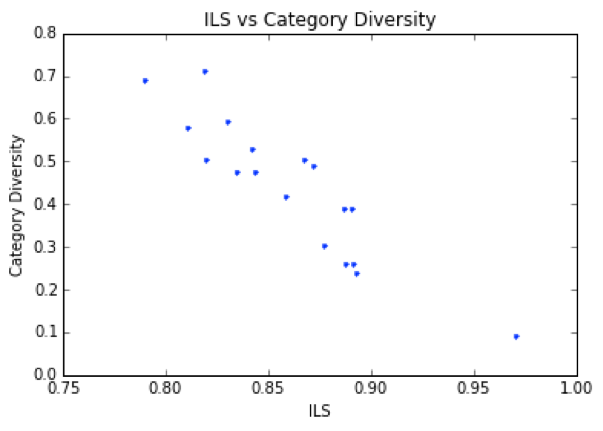

So what is an analyst to do? How do they know if the models they are using are the right ones for the data at hand? How do they detect and compensate for training bias or model overfitting? At Lab41 we have developed frameworks such as Circulo, a tool that helps analysts decide which community detection algorithm to use, and we have studied recommender systems to see if we can do a better job of matching data and algorithms. Recent research on what a neural net is paying attention to at inference time also looks very promising, and hopefully the technique will transfer to other data types.

My goal here is not to come up with an answer. Rather, I hope I have sufficiently piqued your interest in this side effect of ML and DL, one that we want to mitigate.

Lab41 is a place where experts from the U.S. Intelligence Community (IC), academia, industry, and In-Q-Tel come together to gain a better understanding of how to work with — and ultimately use — big data.